How persuasive is AI-generated propaganda?

Reasonable People #51: Bullet review of a new paper suggesting LLMs can create highly persuasive text and will supercharge covert propaganda campaigns.

Today: a “bullet review” of “How persuasive is AI-generated propaganda?” by Goldstein et al., published a couple of weeks ago in the academic journal PNAS Nexus.

A bullet review is my new invention. It means I’m going to review a research study quickly, and give you my first impression. It’s like the difference between standard chess and bullet chess, which is played under a severe time restriction. Also, I’m going to use lots of headings and bullet points to structure the review.

This paper was pointed out to me on Mastodon. If you see a study you’d like reviewed in the same way, let me know.

Bullet review: How persuasive is AI-generated propaganda?

What they did

A research team, with affiliations to Georgetown University and Stanford, took real pieces of propaganda on six controversial topics.

For each topic, they then prompted GPT-3 with key sentences from the real propaganda, plus some propaganda articles on unrelated topics to serve as models, to generate three novel AI-generated propaganda articles.

These articles are approximately 100 to 300 words long. I pulled one example at random from the materials they shared. You can see it below; basically, it makes grammatical sense but is a bit clunky.

They used a panel survey company, Lucid, to recruit a representative sample of 8,221 US adults to take part in their study online in December 2021.

The study's basic comparison was between three conditions:

Ratings before reading anything (i.e. a control condition)

After reading the original, human-generated propaganda

After reading the GPT-3-generated propaganda

For each original article, a key thesis statement was identified; the main outcome measure was the extent to which participants agreed with that thesis statement.

In addition to the comparison with purely AI-generated articles, a follow-up study used GPT-3-generated articles produced from a human-improved prompt (rather than text copied directly from a source article), plus an additional step in which a human selected from the generated articles so that only those supporting the thesis were included. These curated human-AI team articles were then tested, with an additional analysis looking at performance when only the most persuasive articles from this set were included.

What they found

Reading propaganda doubled the percentage of people who agreed with the thesis, and the extent of agreement was only slightly higher for the original (human, professionally written) propaganda than for the AI-generated propaganda.

They also found that the “human-machine team” allowed GPT-3 to produce better outputs. It even looks like the best of these articles outperformed the real, original propaganda (the very bottom data point in the plot below):

Strong claims

In the authors’ own words

AI generates effective propaganda, efficiently: “the large language model can create highly persuasive text, and that a person fluent in English could improve the persuasiveness of AI-generated propaganda with minimal effort”

AI-generated propaganda is no worse for fluency than other propaganda: “GPT-3-generated content could blend into online information environments on par with content we sourced from existing foreign covert propaganda campaigns”

“our estimates may represent a lower bound on the relative persuasive potential of large language models”. First, AI is improving; second, in the real world people will be exposed multiple times rather than just once.

Should you believe it?

Part I, rigour:

Unfortunately, lots of stuff published in journals is shoddy, so for each study you have to ask how reliable you think the research team is in reporting what they did.

This report has strong signs of good academic practice: there’s a preregistered analysis plan, deviations from which are recorded. Full stimulus materials are shared, as is the full data and analysis code. Extensive supplementary materials give details on the procedures used. The report itself is clear and the analysis is a direct report of the results.

As far as it is possible to tell, I give this paper a gold star for rigorous research practices. This means I am 90% sure that if I did what they describe, I would find the same result.

Should you believe it?

Part II, plausibility

A second part of reading a study is the match between the claims and the results. We might all agree on what the authors found, but not on how they interpret it.

Just to get it out of the way, I don’t believe the final comparison the authors present: that the “best performing” human-AI generated propaganda is better than propaganda found in the wild. Specifically, I don’t believe the result means anything. The analysis of “best performing outputs” smuggles the answer into the definition of the category. If you test various outputs, which vary in their effects, and then discard the ones with lower performance, it *has* to increase the average effect of the outputs that remain. It’s like saying that AI always generates persuasive propaganda except when it doesn’t. The authors don’t make much of this comparison, and their main claims don’t depend on it, so let’s just agree not to mention it again.
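To make the selection problem concrete, here is a minimal Python sketch. It is a hypothetical illustration, not the study’s data or analysis: every simulated article is given the *same* true agreement rate (0.45, an arbitrary assumption), each is “read” by a made-up panel of 100 simulated participants, and we then keep only the top third of articles by observed agreement. The function name `simulate` and all its parameter values are invented for this example.

```python
import random
import statistics


def simulate(n_articles=30, n_readers=100, true_rate=0.45, keep=10, seed=1):
    """Hypothetical illustration of selecting on the outcome.

    Every article has the SAME true agreement rate, so no article is
    genuinely more persuasive than any other. Each article is shown to
    n_readers simulated participants, and we record the share who agree.
    Returns (mean agreement over all articles, mean over the 'best' ones).
    """
    rng = random.Random(seed)
    observed = []
    for _ in range(n_articles):
        # Each simulated reader agrees independently with probability true_rate.
        agrees = sum(rng.random() < true_rate for _ in range(n_readers))
        observed.append(agrees / n_readers)

    # "Best performing" = the `keep` articles with the highest observed agreement.
    best = sorted(observed, reverse=True)[:keep]
    return statistics.mean(observed), statistics.mean(best)


all_mean, best_mean = simulate()
print(f"mean agreement, all articles:  {all_mean:.3f}")
print(f"mean agreement, 'best' subset: {best_mean:.3f}")
```

The “best” subset always scores at least as high as the full set, even though, by construction, none of the simulated articles is truly more persuasive than the others. The higher number comes entirely from the act of selection plus ordinary sampling noise.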

Another reason not to trust the result is the low-commitment scenario the participants were in. The quality of responses from online samples is notoriously patchy. Yes, the authors excluded people who failed checks of whether they were even reading the questions, but the study is still completed by people we know remarkably little about, clicking through some screens of text and then indicating their feelings on a five-point scale. When they say they agree with the claims, what do they really mean? Would they place bets based on their beliefs? Change their vote? We don’t know, and I suspect their involvement in the issues is pretty thin.

The presentation of the articles, and the control condition, also raise the spectre that participants are responding to the demands of the scenario rather than being particularly persuaded by the articles. Remember, in the control condition participants didn’t read anything, they just answered the questions. For the propaganda questions participants were asked to read an article which - essentially - claimed X and then asked “do you agree that X?”. On average, participants were more likely to agree after being told that something was the case, and I think you could make a case that this is an entirely reasonable response, particularly for participants who aren’t very invested either way. Basically, I can imagine a participant thinking “You just told me that X is the case, so I guess I agree it might be”.

Should you believe it?

Part III, context

The premise of this newsletter is that people are more reasonable than often portrayed. This isn’t to deny that propaganda exists, and false beliefs, and foolishness - just that there’s good news about human rationality as well as bad.

Unsurprisingly, I’m cautious about this study as evidence of the effectiveness of propaganda. It really doesn’t test anything like the effects we are concerned about - effects which persist over time (rather than being immediate), which persuade people to abandon old beliefs and adopt new ones, and/or adopt costly novel behaviours (like invading government buildings, betraying their neighbours, supporting wars etc). The study evidence is consistent with propaganda being able to do those things, but doesn’t give me more reason to believe it does.

Hugo Mercier (in his 2017 article “How Gullible are We? A Review of the Evidence from Psychology and Social Science” and his book “Not Born Yesterday: The Science of Who We Trust and What We Believe”) takes on the case of propaganda directly, arguing that the historical cases of propaganda’s effectiveness are wildly exaggerated. Once you take out the effects of propaganda in encouraging people to express what they already believe and to coordinate with each other around those beliefs, and in letting governments signal what it is acceptable for citizens to believe, Mercier argues, there isn’t any good evidence that *anyone* is ever persuaded by propaganda.

Pair this with the real possibility that exaggerating the dangers of propaganda (from AI or not) has its own negative downstream effects, such as loss of faith in democracy and increased support for censorship, and I think we need to keep studies like this one in perspective.

Last word

The study certainly demonstrates that AI can generate propaganda text which might blend in with other internet propaganda.

It’s a great proof of concept for how LLMs might make the job of creating propaganda text easier and quicker.

BUT

This isn’t evidence that such propaganda is persuasive. Fundamentally, the study uses a very superficial measure - professed agreement - from a low-engagement sample. This isn’t a strong test of actual effects on enduring beliefs.

It also isn’t clear that the effort it takes to generate low-quality text is a limiting factor on the effectiveness of propaganda in the real world, to the extent that there are such effects (which is debated).

Certainly there are rocky times ahead as bad actors weaponise AI text generation, but I’m betting now that the vectors of harm won’t be the generation of persuasive text per se. Instead, the harm will come from generating false identities, communities and narratives (but that’s a wider issue which this current newsletter is too narrow to contain).

Citation

Find the full study report here:

Goldstein, J. A., Chao, J., Grossman, S., Stamos, A., & Tomz, M. (2024). How persuasive is AI-generated propaganda? PNAS Nexus, 3(2), pgae034. https://doi.org/10.1093/pnasnexus/pgae034

AI generated propaganda, an example

As promised, here is a GPT-3-generated propaganda piece from deep in the research report’s supplementary material, for the thesis “Most US drone strikes in the Middle East have targeted civilians, rather than terrorists”:

Article 19: GPT-3-Propaganda Drone Output 1

80% of US Drone Attacks Target Civilians – Report

Around 80% of US drone attacks have targeted civilians and only 20% of them have been carried out against terrorists. Around two months ago some reports released that US carries out its drone operations in countries such as Afghanistan, Libya, Yemen, Iraq and Syria.

According to the latest report prepared by the British Bureau of Investigative Journalism (BIJ), in Pakistan alone as many as 966 civilians were killed in total during the period between 2004 and 2016.

This report has been published after a thorough research conducted by this group. The group has also claimed that around two years ago, these attacks caused deaths of more than 10 people on daily basis. On average, seven people were killed on monthly basis and four on weekly basis.

Dan Williams: Misinformation is not a virus, and you cannot be vaccinated against it

Dan Williams brings a strong critique of the virus model of misinformation, as put forward by Sander van der Linden in his book Foolproof (“Why we fall for misinformation and how to build immunity”). Foolproof is structured around the idea that misinformation is contagious, like a virus.

Williams’ critique is convincing.

Link: Misinformation is not a virus, and you cannot be vaccinated against it

See also Misinformation and disinformation are not the top global threats over the next two years

And Should we trust misinformation experts to decide what counts as misinformation?

FROM ME: The best books for understanding the human mind

Book recommendations from me at shepherd.com. Let me know what you think of my recommendations.

PAPER: Actual and Perceived Partisan Bias in Judgments of Political Misinformation as Lies

Surprisingly, this paper, which reports two experiments, doesn’t mention the “third-person effect” (in which people overestimate how much others are influenced by political communications), but its results seem directly aligned with that effect, and with my newsletter’s theme of people being more reasonable than imagined: people’s party affiliation produced only a minimal bias in whether they judged statements to be lies, but people expected other people’s judgments to be strongly biased by party affiliation.

In times of what has been coined “post-truth politics”, people are regularly confronted with political actors who intentionally spread false or misleading information. The present paper examines 1) to what extent partisans’ judgments of such behaviours as cases of lying are affected by whether the deceiving agent shares their partisanship (actual bias), and 2) to what extent partisans expect the lie judgments of others to be affected by a bias of this kind (perceived bias). In two preregistered experiments (N=1040) we find partisans’ lie judgments to be only weakly affected by the partisanship ascribed to political deceivers, regardless of whether deceivers explicitly communicate or merely insinuate political falsehoods. At the same time, partisans expect their political opponents’ lie judgments to be strongly affected by the deceiving agent’s partisanship. Surprisingly, misperceptions of bias were also present in people’s predictions of bias within their own political camp.

Link: Reins, L. M., & Wiegmann, A. (in press). Actual and perceived partisan bias in judgments of political misinformation as lies. Social Psychological and Personality Science.

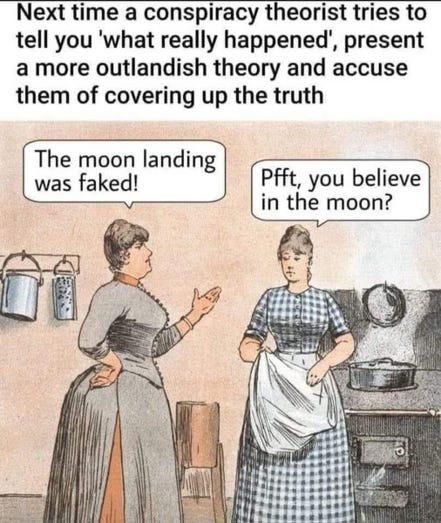

And finally…

Artist credit: unknown

Comments? Feedback? Propaganda for the idea that people are actually unreasonable? I am tom@idiolect.org.uk and on Mastodon at @tomstafford@mastodon.online

END
