On the over- and under-detection of agency

Reasonable People #39: Why we may overestimate chatGPT, and why smarter people may be particularly prone to this

Again, a newsletter about the reflection of human psychology provided by the mirror of large language models. Catch up on part 1, “Artificial reasoners as alien minds”, and part 2, “The tuned stack”.

Hard on the heels of chatGPT 3, chatGPT 4 has arrived. These are members of the family of Large Language Models (LLMs), algorithms which, trained on massive corpora of human-produced writing, absorb the statistical structure of our textual worlds and echo back to us novel, but plausible, combinations of words. Somehow, out of the basic principle that “Bacon and” is plausibly followed by “eggs”, deep learning conjures apparent agents that do a bewildering variety of things - pass exams, write code for games, generate birthday gift ideas (he loved it, btw).

My friend M. is my anti-hype man. He’s done research and applied work using machine learning models, and inoculated himself against tech hyperbole through years of industry experience. I go to him for grounding when some new technology is offered as a universal solution or massive breakthrough. I look to him for a measured take, for nuance. For calm. Late at night, after chatGPT 4 was released, he messaged me just to say it was “nutso bonanzo”. His summary of the way we currently understand and operate in the world: “It’s all over”.

This is like the Pope messaging me to say that God is Dead.

I don’t want to deny that LLMs are incredibly, dizzyingly impressive, nor that they will disrupt many current practices, but I want to talk about a way in which models like chatGPT may be overestimated, and in particular may be overestimated by some of the best-informed, smartest individuals. I would say “a way in which they trick us into overestimating them”, but that would be falling for exactly the misdirection I am going to argue is at work.

The construction of conscious will

Dowsing is the divination practice where you hold two rods (either parts of a split twig or pieces of stiff wire) and they move as you pass over water. The essential facts about dowsing, as I’m going to tell it, are:

It is impossible - there is no magic force projected by water which can make the dowsing rods move.

It works - people can reliably use dowsing to find water. My friend D. told a surprising story about the time, after he had bought his house, when the man from Yorkshire Water arrived to find a missing outflow pipe in the front yard. For those of you not familiar with Yorkshire Water engineers, think, essentially, of the anti-hippy: as far as you can get from fey-spirited and tie-dyed. The engineer tried a few things to locate the pipe and then, with a gruff comment to the effect of “don’t laugh, it just works, okay”, went back to his van to fetch the dowsing rods (and, of course, used them to find the pipe immediately).

People swear *they* are not deliberately moving the rods.

The psychology of this is the ideomotor effect, whereby we generate movements but don’t know we are doing it. It’s the same phenomenon behind the Ouija board and is exaggerated in situations where we have plausible confusion over the source of a movement (e.g. random noise is large, or other people are involved), or where the consequences are outsized compared to the initiating movements, or both. With dowsing, the smallest movements in the hands are exaggerated, allowing the rods to swing back and forth seemingly under their own power.

There’s a fun demonstration of the ideomotor effect you can try yourself now: take a string and a small weight (a ring, a button on a thread, whatever is to hand). Mark a point on a piece of paper and hold the weight above the point. Now try your hardest to keep your hand absolutely still, but at the same time ask yourself a yes/no question (“Will this work?”, “Do I need coffee?”, anything will do). Keep holding your hand still, but know that the weight will start to swing clockwise for “YES” and anticlockwise for “NO”.

It works! The weight will swing, and you’ll have the impression that you are holding your hand still, but tiny movements of your hand, exaggerated by the effort of holding your arm out and amplified as the momentum of the swing builds up, generate a YES/NO answer.

Now think how you’d feel about this if you were inclined to believe in unseen forces and had been told to do it by a dowser rather than a reductionist cognitive scientist.

The ideomotor effect shows that even at the most fundamental level, the control of our own bodies, we’re capable of miscalibration: we can cause things but not know we are causing them.¹

Illusions of epistemic depth

In the realm of reasons we can also be miscalibrated. The Illusion of Explanatory Depth is the phenomenon where people think they understand something, like how a flushing toilet or a bicycle works, but don’t. Anyone who has ever taught knows the reality of this phenomenon: it is often only when you come to tell someone else how to do something that you realise how badly you understand it yourself. Part of the explanation offered by the original authors (Rozenblit & Keil, 2002) is that the fluidity with which we interact with everyday mechanisms can mislead us into mistaking ease of use for understanding.

The relevance for large language models is that, unlike most computer software, they use one of our most natural interfaces: language. Our instinct is to talk and listen, write and read, and LLMs can jump into this loop without requiring any adjustment from us. This makes it easy to use LLMs as a tool without taking full account of just how much we put into them: in the choice of prompt, in the questions and follow-up questions we ask, and in the selection of answers we make.

One form of this is raw selection bias: the best answers from chatGPT are the most likely to be shared, or users are likely to keep trying until they get a good answer (a variety of optional stopping, a known bias-generating mechanism in formal experimentation). Another form is that users rapidly home in on the useful, important or interesting parts of a chatGPT answer, perhaps discarding the irrelevant or unhelpful parts so easily they don’t even realise it.
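To make the optional-stopping point concrete, here is a toy simulation. It is only a sketch under loudly stated assumptions: the “quality” of each answer is modelled as a draw from a standard normal distribution, a user keeps re-asking until an answer clears an arbitrary threshold (or settles for the best one after ten tries), and the function names `sample_answer_quality`, `retry_until_good` and `simulate` are hypothetical, invented for illustration. Nothing about chatGPT itself is modelled.

```python
import random
import statistics


def sample_answer_quality(rng: random.Random) -> float:
    # Assumption (hypothetical model): the quality of any one answer is a draw
    # from N(0, 1). Every answer comes from the same distribution; nothing improves.
    return rng.gauss(0.0, 1.0)


def retry_until_good(rng: random.Random, threshold: float = 1.0, max_tries: int = 10) -> float:
    """Optional stopping: keep re-asking until an answer clears `threshold`.

    Returns the quality of the answer the user ends up keeping. If no answer
    clears the threshold within `max_tries`, the user settles for the best one seen.
    """
    best = float("-inf")
    for _ in range(max_tries):
        quality = sample_answer_quality(rng)
        if quality >= threshold:
            return quality  # stop as soon as we see a "win"
        best = max(best, quality)
    return best


def simulate(n_users: int = 10_000, seed: int = 0) -> tuple[float, float]:
    """Mean quality of a single answer vs. mean quality of the answers users keep."""
    rng = random.Random(seed)
    single = [sample_answer_quality(rng) for _ in range(n_users)]
    kept = [retry_until_good(rng) for _ in range(n_users)]
    return statistics.mean(single), statistics.mean(kept)


if __name__ == "__main__":
    typical, shared = simulate()
    print(f"mean quality of a single answer:       {typical:.2f}")
    print(f"mean quality of the answer users keep: {shared:.2f}")
```

Every answer here is drawn from the same distribution, yet the answers people keep (and share) average well above a typical one, purely because of when they stop asking.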

In the first paragraph I credited chatGPT with the ability to pick a great birthday present, but looking back at the queries I made, you can see that chatGPT made a bunch of suggestions and I homed in on one to ask a follow-up question about. And then it was me who decided that the reply to the follow-up was good enough to act on, and actually made the purchase. And maybe if that follow-up hadn’t satisfied me I’d have asked others, and so on, until I got a response that I liked. Here I am feeding extra information to chatGPT, selecting for wins, and discarding the many possible directions we could have taken in the garden of forking paths which wouldn’t have produced “winning” answers.

Last week, AI luminary Terry Sejnowski of the Salk Institute posted an example of chatGPT 'showing' causal understanding on the Connectionists listserve.² The test was done in response to Chomsky's claim that this was something an LLM couldn't do. Here's Terry's test:

Me: If I hold an apple in my hand and let it go what will happen?

GPT: The apple will fall to the ground due to the force of gravity.

Me: What about any such object?

GPT: Any object released from a person's hand will fall to the ground due to the force of gravity.

Me: What would happen if there wasn't a force of gravity?

GPT: If there were no force of gravity, objects would not be able to stay on the ground and would float away in all directions.

Now Sejnowski is writing in conversational mode, to a discussion list, so I don’t want to overweight the claims he might be making, but I think what he’s saying is that, contra Chomsky, chatGPT can appear to offer correct causal explanations (which, of course, it can, since it is trained on corpora of human text in which people write about causal explanations all the time), and so being able to offer correct explanations is not a good test of understanding. A very enjoyable discussion ensued on the mailing list. Some pointed out that it was quite easy to get the opposite result, incorrect explanations, from chatGPT, but I want to focus on the understanding that Sejnowski himself put into the interaction. Not only did he formulate the original question in a particular way, but he asked two specific follow-up questions which gave chatGPT the opportunity to respond with correct, “understanding-demonstrating” answers. It is no exaggeration to say that Sejnowski is a world-leading expert in the domain of machine intelligence, yet in this moment he seems to neglect the role his own intelligence plays in eliciting strong, intelligence-seeming answers from the LLM.

I see the same thing when people post about chatGPT being able to write code. The hard thing in coding is knowing where to start, or knowing what the thing you are stuck on is called so you can search for an answer. We take for granted the computational literacy that allows us to make the hundred little choices about what to attend to and what to discard when interacting with computers through code. The symbiosis of an expert coder and chatGPT may be able to write code, but chatGPT in the hands of a complete novice would be so brittle as to be close to useless.

chatGPT is certainly a force multiplier for writing code, but to say that it can be the author is to neglect the intelligence of the human operator. It may be that the strong coupling between human and machine creates the very conditions which allow us to lose our sense of agency, to forget or ignore the inputs we put into the machine, and so to be delighted by the outputs. And more skilled operators can make more deft queries, and so elicit more intelligent-seeming responses from the models.

Perhaps it is not surprising that so much of the conversation around chatGPT is about it substituting for human intelligence rather than augmenting it, but I am convinced that augmentation is the more fruitful framing. The models are amazing, but they are ultimately derived from the accreted mass of working human intelligence in text, and they can only reflect back what we put into them: input which is, again, determined and driven by human intelligence and values.

References

More on the ideomotor effect in Chapter #80 of Mind Hacks

Rozenblit, L., & Keil, F. (2002). The misunderstood limits of folk science: An illusion of explanatory depth. Cognitive Science, 26(5), 521-562.

Previously by me on chatGPT: RP #36: Artificial reasoners as alien minds; RP #38: The tuned stack

Financial Times, 2017-11-21 Engineers still practise water divining, say UK utilities ‘Medieval method with dowsing rods used to detect leaks and pipes by 10 UK groups’

more chatGPT / LLM

Any sufficiently advanced technology etc, and that for sure means that prompt engineering = the new shamanism: good post on ‘The Waluigi Effect’, which contains a nice framing on how to think about LLMs and particularly on how to use characters to evoke particular responses (and their opposites).

Stay up to date with ML events via the excellent Data Machina newsletter

On Generative AI, phantom citations, and social calluses. Dave Karpf has a sober take on the social effects of LLMs

"many AI systems are ... “cultural technologies.” They are like writing, print, libraries, internet search engines, or even language itself. They summarize and “crowd-source” knowledge rather than creating it. They are techniques for passing on information from one group of people to another, rather than ... creating a new kind of person. Asking whether GPT-3 or Dall-E is intelligent ... is like asking whether the University of California’s library is intelligent "

Children, Creativity, and the Real Key to Intelligence. Alison Gopnik, October 31, 2022

Since we’re running all this compute anyway…

Frontiers in data centre - swimming pool symbiosis: "the heat from the computers warms the water and the transfer of heat into the pool cools the computers."

lower carbon, cheaper data, heated swimming. Win

In other news…

A recording of my talk @ Centre for Educational Neuroscience, UCL "Maximizing the potential of digital games for understanding skill acquisition"

Key paper: Stafford, T., & Vaci, N. (2022). Maximizing the Potential of Digital Games for Understanding Skill Acquisition. Current Directions in Psychological Science, 31(1), 49–55. https://doi.org/10.1177/09637214211057841

I am a little embarrassed by this talk because I scheduled it immediately after two hours of MSc teaching, so I already felt slightly deranged going into it. I’ve no idea if you can tell, and obviously I’m not going to be able to face listening back to the recording to find out. Watch at your own risk!

And finally…

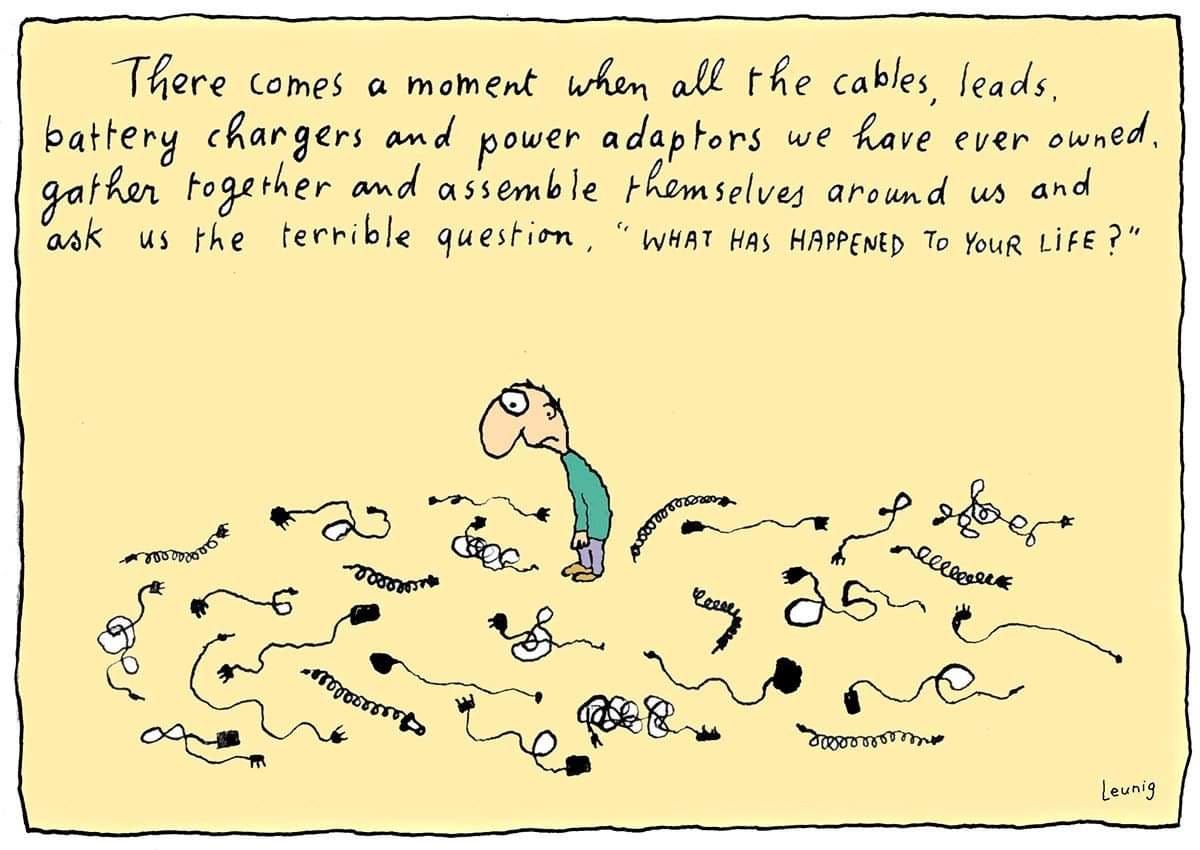

"There comes a moment...." Cartoon by Michael Leunig

END

Comments? Feedback? Gift ideas? I am tom@idiolect.org.uk and on Mastodon at @tomstafford@mastodon.online

¹ In his book “The Illusion of Conscious Will”, Dan Wegner (2002) builds a case that *all* our experiences of self-causation are constructed, using the same fundamental mechanisms of inference, rather than directly perceived. It’s a compelling book, but I’ve not read it since I put on the critical spectacles of the replication crisis, so I would probably give any individual study cited in the book only a 50% chance (at best) of actually reporting a true effect; where this leaves the overall thesis I’m not sure.

² Yes, academic listserves are still a thing. There’s another newsletter to write about their ongoing role and the epistemic niche they fill.
