Unhinged, unfortunate, unproven, inadequate, misdirected

RP#59 The bigger picture around Meta's announcement on fact-checking

Last week I reviewed Community Notes, the system Meta announced it would now adopt for fact-checking. While I see positives in the Community Notes system, on its own it is clearly an inadequate response to the problem of misinformation on social media platforms.

‘Unhinged’

Here’s Alexios Mantzarlis in Faked Up, his newsletter (essential for anyone interested in fact-checking and misinformation), in which he describes Zuckerberg’s justification for dropping Meta's industry-leading fact-checking program as ‘unhinged’. More:

Zuckerberg justifying the termination of this program as a defense of free speech is particularly galling given that fact-checking labels did not lead to the underlying posts getting removed. Meta (rightly) took the approach of fact-checking as a contextual intervention which reduced a post’s reach but didn’t prevent users from continuing to access it.

‘Unfortunate’

And here’s Angie Drobnic Holan, Director of the International Fact-Checking Network (the network whose partnership Meta has just dropped):

Here's my statement as International Fact-Checking Network Director on Meta ending its US factchecking program: "This decision will hurt social media users who are looking for accurate, reliable information to make decisions about their everyday lives and interactions with friends and family. Fact-checking journalism has never censored or removed posts; it’s added information and context to controversial claims, and it’s debunked hoax content and conspiracy theories. The fact-checkers used by Meta follow a Code of Principles requiring nonpartisanship and transparency. It’s unfortunate that this decision comes in the wake of extreme political pressure from a new administration and its supporters. Factcheckers have not been biased in their work -- that attack line comes from those who feel they should be able to exaggerate and lie without rebuttal or contradiction."

Source: https://www.linkedin.com/in/angieholan/

Unproven?

The promise of the Community Notes algorithm is that it can spin fact-check gold from highly partisan straw. The idea is appealing, but it must surely have boundary conditions. The information environment contains bad actors, who can brigade together in crowdsourcing systems to manipulate things such as which sources count as trustworthy, and so bias the outputs by distorting the inputs. Separately, there is (as far as I am aware) an open question about how well the algorithm actually works in practice, especially since it wouldn’t be surprising if the crowd providing fact-checks on partisan information were itself highly partisan (given that those who opt in are probably among the most engaged, and so most polarised, users). Empirical work suggests this is the case:

(excerpt from the abstract)

we find that contextual features – in particular, the partisanship of the users – are far more predictive of judgments than the content of the tweets and evaluations themselves. Specifically, users are more likely to write negative evaluations of tweets from counter-partisans; and are more likely to rate evaluations from counter-partisans as unhelpful. Our findings provide clear evidence that Birdwatch users preferentially challenge content from those with whom they disagree politically. While not necessarily indicating that Birdwatch is ineffective for identifying misleading content, these results demonstrate the important role that partisanship can play in content evaluation

Reference: Allen, J., Martel, C., & Rand, D. G. (2022, April). Birds of a feather don’t fact-check each other: Partisanship and the evaluation of news in Twitter’s Birdwatch crowdsourced fact-checking program. In Proceedings of the 2022 CHI conference on human factors in computing systems (pp. 1-19).

(via Faked Up)

Inadequate

Here’s Alexios again with his take on crowdsourcing fact-checks. He makes clear that he is pro-crowdsourcing, but skeptical of relying on crowdsourcing alone for all your fact-checking needs.

no project outside Wikipedia has succeeded at delivering a broadly legitimate fact-generating crowdsourced institution at the scale necessary for the modern information ecosystem.

In the (paywalled) post, Alexios steps back and reviews the conditions required for a crowdsourcing system to work. Recommended. The conclusion, which I strongly endorse, is that crowdsourcing can be a useful component of an information moderation system, but it should not be the only component:

It’s too bad that instead of building from the lessons of complementary instruments to bring context to disputed facts online, Mark Zuckerberg chose to hyperbolize the benefits of one and the criticisms of the other.

A sentiment echoed in this open letter to Zuckerberg from ‘the world’s fact checkers’:

there is no reason Community Notes couldn’t co-exist with the third-party fact-checking program; they are not mutually exclusive.

Misdirected

In ‘Meta just flipped the switch that prevents misinformation from spreading in the United States’, Casey Newton, writing in his Platformer newsletter, reports on the other changes Meta has made alongside ditching professional fact-checking:

Last week, Meta announced a series of changes to its content moderation policies and enforcement strategies designed to curry favor with the incoming Trump administration. The company ended its fact-checking program in the United States, stopped scanning new posts for most policy violations, and created carve-outs in its community standards to allow dehumanizing speech about transgender people and immigrants. The company also killed its diversity, equity and inclusion program.

Behind the scenes, the company was also quietly dismantling a system to prevent the spread of misinformation. When the company announced on Jan. 7 that it would end its fact-checking partnerships, the company also instructed teams responsible for ranking content in the company’s apps to stop penalizing misinformation, according to sources and an internal document obtained by Platformer.

The story here is that Meta has developed successful classifiers (machine learning algorithms) which can identify hoaxes, and is simply turning them off (so far only in the US). Instead it will rely on an untested and as-yet-undeveloped alternative system.

A final reminder that the Community Notes aspect is only a small part of this announcement. It is hard to see how this will do anything other than further the decline of Facebook, at least, as a useful platform, especially for news (there’ll still be an incentive to use it to contact friends, family or community groups for anyone of the wrong age to be invested in other platforms).

In other news

De-bunking Pre-bunking

Love this line from Mike Caulfield:

Not sure if I’ve said here how much I really hate the term “pre-bunking”, the adoption of which was largely a way for psychologists to engage misinformation with educational interventions without having to read educational theory.

Check out the whole post for a quick review of the information you need to get ahead of LA fire misinformation.

Other minds

This podcast, Peter Godfrey-Smith on Sentience and Octopus Minds (on Sean Carroll’s Mindscape), brilliantly showcases the value philosophy can bring to biology, and the value research in evolution and animal behaviour can bring to philosophy of mind. Mind-expanding.

Inspiration via antagonism

Ted Gioia: You Don't Need a Mentor—Find a Nemesis Instead. Thought-provoking essay. Would anyone like to fund my start-up idea? A dating app, but for nemeses: we match you with someone you can hate, driving you to new heights of inspiration in your chosen field of endeavour.

Boundary conditions

Wrote this about proactively managing your workload. The thought is that lots of people will tell you how to manage your TODO list, but what you really need is advice on how to stop things getting onto your TODO list in the first place. Mostly aimed at academics (and one academic in particular: me).

And finally…

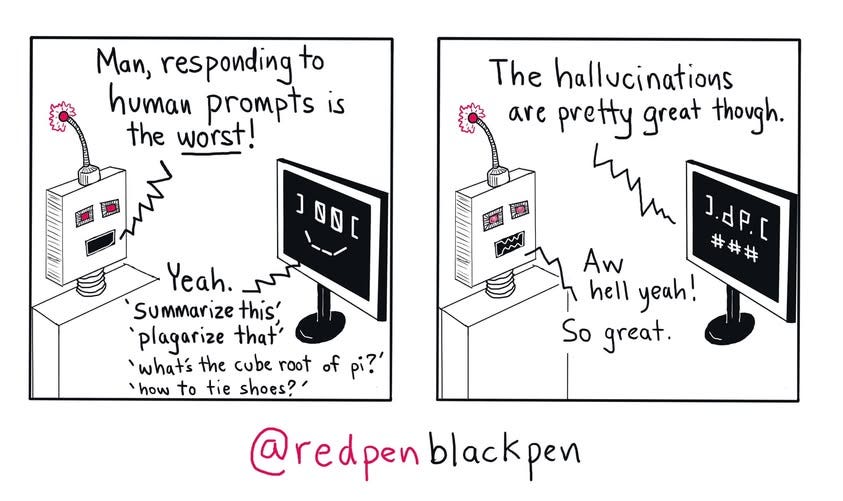

Source: redpenblackpen

END

Comments? Feedback? Suggested content warnings for this newsletter? I am tom@idiolect.org.uk and on Mastodon at @tomstafford@mastodon.online