The algorithmic heart of Community Notes

RP #58 What we know about the Fact Checking system Meta is adopting from Musk's X

Meta announced yesterday that they would move away from using professional fact-checkers on Facebook and Instagram (they also announced a set of changes to their moderation rules, allowing some content which would previously have been banned).

The move was widely greeted with concern.

BBC: Huge problems with axing fact-checkers, Meta oversight board says

Canadian CTV News: 'It’s just going to be a nightmare': Experts react to Meta’s decision to end fact-checking.

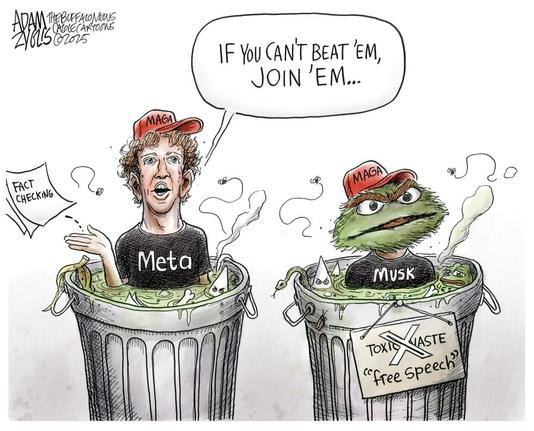

Alongside the concern there was also derision:

Algorithm Watch: Zuckerberg Makes Meta Worse to Please Trump

And lots of commentary and cartoons which were a lot ruder than this.

But what do we know about Community Notes, the fact-checking system Meta will adopt from X? And what do we know about how the algorithm at the heart of the Community Notes system actually works? That’s where we’re going today. Let’s check the facts on this fact-checking setup!

Community Notes: the basics

Community Notes is a crowd-sourcing system. Users opt in to submit notes on content which is posted on the platform. Other opted-in users vote on submitted notes, and when a threshold is reached that note appears to everyone on the platform, appended to the original content.

Here’s what it looks like [1]:

Community Notes, originally called Birdwatch, was launched in 2021 and rolled out platform-wide in 2023 (Musk acquired Twitter in 2022). Wikipedia reports that Yoel Roth, Twitter’s head of Trust and Safety until November 2022, stated the Community Notes system was originally intended to complement, not replace, professional moderation.

Community Notes: what we’ve learnt

The context for the deployment of Community Notes looms large: Musk’s takeover, his cost cutting, scaling back of Trust and Safety staff and infrastructure, bullishness over free speech and over AI, as well as the general anti-establishment milieu of US tech culture. The skeptical readings of Community Notes are legion: that it is a cost-effective smokescreen for neglecting responsibility for combating misinformation, and that it is hand-in-glove with providing a friendly environment for various right-wing talking points.

Regardless, and separate from whether it is a good solution for the problems with the platform, Community Notes is interesting in its own right. Crowd-sourcing has had some demonstrable successes, such as Wikipedia and prediction markets, and Community Notes uses an interesting new algorithm for determining which fact-checks to promote, one which promises to overcome political polarisation. Further, on X, the Community Notes algorithm, and the Notes themselves and their votes, are all open for inspection.

Research on Community Notes has shown a few things:

Community Notes fact-checks can be high quality. This study of COVID-related Notes in 2022-23 found 97% accuracy (according to medical professionals).

Community Notes, like other fact-checks, work. This study found that notes on inaccurate tweets reduce retweets by half, and increase the probability that the original author deletes the tweet by 80%. This mirrors research by Twitter’s own team.

Community Notes are too slow. Most sharing of misinformation is in the immediate hours after it is posted. Community Notes take time to be written and voted on, by which time - arguably - lots of the damage has been done. This report on misinformation about the Israel-Hamas conflict showed that relevant notes typically took more than seven hours to show up, with some taking as long as 70 hours.

Most notes aren’t seen, most misinformation doesn’t receive notes. Professional fact-checkers have been cool about the practical import of Community Notes, as summed up in this Poynter headline: “Why Community Notes mostly fails to combat misinformation”. An analysis of the 2024 US Election by Alexios Mantzarlis and Alex Mahadevan concluded:

At the broadest level, it’s hard to call the program a success.

Only 29% of fact-checkable tweets in our sample carry a note rated helpful. And of the helpful notes, only 67% are assigned to a fact-checkable tweet. This is not the kind of precision and recall figures that typically get a product shipped at a big tech platform.

…

Zooming out, the picture doesn’t look any brighter. In the three days leading up to the election, fewer than 6% of the roughly 15,000 notes reached helpful status.

Crowd-sourcing risks crowd biases. This study found that more of the sources cited in Community Notes were left-leaning, while this study analyses the risk that groups of bad-faith actors can skew the algorithm by targeting which sources are counted as credible.

Speak, algorithm

The current operation of Community Notes as a fact-checking system is one thing, but at its heart is the bridging algorithm, which is designed to select quality notes despite polarisation among the crowd of users providing and voting on notes. Algorithmic approaches to harnessing collective intelligence in an era of increasing tribalism deserve our attention. How does it work?

Jonathan Warden has written about the algorithm and its implementation, as has Vitalik Buterin. If you want technical discussions I recommend them; what I say here is derived from reading them and is a significant simplification (errors are all mine, of course).

At heart, the bridging algorithm works by using Users’ voting behaviour across all Notes to weigh the votes on a specific Note. A typical crowd-sourcing algorithm will judge quality based on averaging or summing votes (i.e. the Note with the most upvotes would be judged best). The bridging algorithm doesn’t do this. Rather it tries to model why notes receive votes, using the population of all users and all their votes to identify clusters. If, for example, there are a bunch of conservative users who tend to upvote fact-checks on progressives, and a bunch of progressive users who tend to upvote fact-checks on conservatives, the algorithm will identify these clusters of votes and be able to predict that notes from certain users, on certain topics, are likely to win votes simply because of partisan leaning, not because of the factfulness of the content. So, under conditions where there is polarisation, the algorithm can discount predictable votes - e.g. the upvotes from other left-wing users on your left-wing fact-check of some right-wing misinformation.
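To make "model why notes receive votes" a little more concrete: as described in the Birdwatch paper and in Buterin's write-up (both listed at the end), the core of the model is a matrix factorisation. The notation below is a simplified sketch of that description, not a transcription of X's production code.

```latex
\hat{r}_{un} = \mu + i_u + i_n + f_u \cdot f_n

\min_{\mu,\, i,\, f} \; \sum_{(u,n)} \left( r_{un} - \hat{r}_{un} \right)^2
  + \lambda_i \left( i_u^2 + i_n^2 + \mu^2 \right)
  + \lambda_f \left( \lVert f_u \rVert^2 + \lVert f_n \rVert^2 \right)
```

Here r_{un} is user u's rating of note n, \mu is a global baseline, i_u captures how generous user u is overall, i_n is the note's intercept, and f_u and f_n are the latent positions of the user and the note. The product f_u \cdot f_n soaks up the predictable, partisan part of a vote; what is left over in i_n is what the note is ranked on. \lambda_i and \lambda_f are regularisation weights.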

This is more nuanced than you might imagine. It is NOT the case that the bridging algorithm boosts Notes which receive support from across the political spectrum. Rather - and I owe this point to Jonathan Warden - the algorithm boosts Notes which receive support despite, or disregarding, the political leaning of voting users. To drop in some technical language for a moment, the algorithm attempts to discover latent factors in voting behaviour and then discount them. A further nuance, suggested by Warden, is that if the primary polarisation in the community of users is along some other dimension than political leaning (e.g. between naive users and experts) the algorithm might discover this dimension instead and discount it (i.e. actively work to disregard expertise in votes!).
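A toy illustration of "discover latent factors and then discount them", in Python. Everything here is an assumption made for illustration: the eight users, the five notes, the vote pattern, the hyperparameters (`lr`, `lam_i`, `lam_f`) and the plain stochastic gradient descent fit. It is a sketch of the general idea (matrix factorisation with intercepts), not X's implementation. Each rating is predicted as a global baseline, plus a user intercept, plus a note intercept, plus the product of one user factor and one note factor; the note intercept is then read off as the helpfulness score.

```python
import random

# Hypothetical toy data: 4 "left" and 4 "right" raters. The model is never
# told these labels; they only shape the votes. 1.0 = helpful, 0.0 = not.
LEFT = ["L0", "L1", "L2", "L3"]
RIGHT = ["R0", "R1", "R2", "R3"]

ratings = []  # list of (user, note, rating)
for note, left_vote, right_vote in [
    ("left_a", 1.0, 0.0), ("left_b", 1.0, 0.0),
    ("right_a", 0.0, 1.0), ("right_b", 0.0, 1.0),
]:
    ratings += [(u, note, left_vote) for u in LEFT]
    ratings += [(u, note, right_vote) for u in RIGHT]
# The bridging note has fewer raters, but they agree across the divide.
ratings += [(u, "bridging", 1.0) for u in ["L0", "R0", "R1"]]


def fit(ratings, epochs=1000, lr=0.05, lam_i=0.15, lam_f=0.03, seed=0):
    """Fit rating ~ mu + user_icpt + note_icpt + user_fac * note_fac by SGD."""
    rng = random.Random(seed)
    users = sorted({u for u, _, _ in ratings})
    notes = sorted({n for _, n, _ in ratings})
    mu = 0.0
    user_icpt = {u: 0.0 for u in users}
    note_icpt = {n: 0.0 for n in notes}
    # Small random starting factors so the fit can break symmetry.
    user_fac = {u: rng.uniform(-0.1, 0.1) for u in users}
    note_fac = {n: rng.uniform(-0.1, 0.1) for n in notes}
    for _ in range(epochs):
        for u, n, r in ratings:
            pred = mu + user_icpt[u] + note_icpt[n] + user_fac[u] * note_fac[n]
            err = r - pred
            # Gradient step on squared error with L2 regularisation.
            mu += lr * (err - lam_i * mu)
            user_icpt[u] += lr * (err - lam_i * user_icpt[u])
            note_icpt[n] += lr * (err - lam_i * note_icpt[n])
            fu, fn = user_fac[u], note_fac[n]
            user_fac[u] += lr * (err * fn - lam_f * fu)
            note_fac[n] += lr * (err * fu - lam_f * fn)
    return note_icpt, note_fac, user_fac


note_icpt, note_fac, user_fac = fit(ratings)
raw_upvotes = {n: sum(r for _, m, r in ratings if m == n) for n in note_icpt}

# Rank notes by intercept (the part of their support the factor cannot explain).
for n in sorted(note_icpt, key=note_icpt.get, reverse=True):
    print(f"{n:9s} upvotes={raw_upvotes[n]:.0f} "
          f"intercept={note_icpt[n]:+.2f} factor={note_fac[n]:+.2f}")
```

In this toy set-up the bridging note has fewer raw upvotes than any partisan note, but its votes cannot be predicted from where a rater sits on the latent factor, so it should come out with the largest intercept. Note too that the model is never given the left/right labels. It only sees the votes, which is why whatever dimension dominates the voting is the one that gets discounted.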

All the Wisdom of the World

Despite limitations in speed and reach, and vulnerabilities to bias or abuse, Community Notes is a fundamentally legitimate approach to moderation. The community of users provide and vote on Notes. Making it clear why and how fact-checks appear engenders trust in platforms which are too often opaque or perceived as biased. The problems of polarisation, speech moderation and misinformation are bigger than any crowd-sourced approach alone can handle, but that’s not a flaw of Community Notes as a system in itself.

Large platforms may currently be using Community Notes as a smokescreen for neglect of their responsibilities to our information environment, but that shouldn’t stop us recognising the value and potential for future development here. Collective intelligence is enhanced when the wisdom of the crowd is structured and channeled. Making transparent that algorithms do this, and building in a commentary layer on top of the raw feed of social media posts, are both hugely positive steps.

More

Community Notes

Faked up

If you’re interested in this topic, you must read Faked Up, the newsletter from Fact-Check and Misinformation beat doyen Alexios Mantzarlis.

Research on Community Notes

Accuracy:

Allen, M. R., Desai, N., Namazi, A., Leas, E., Dredze, M., Smith, D. M., & Ayers, J. W. (2024). Characteristics of X (Formerly Twitter) Community Notes Addressing COVID-19 Vaccine Misinformation. JAMA, 331(19), 1670–1672. doi:10.1001/jama.2024.4800

Biases:

Saeed, M., Traub, N., Nicolas, M., Demartini, G., & Papotti, P. (2022, October). Crowdsourced fact-checking at Twitter: How does the crowd compare with experts?. In Proceedings of the 31st ACM international conference on information & knowledge management (pp. 1736-1746). https://doi.org/10.1145/3511808.3557279

Kangur, U., Chakraborty, R., & Sharma, R. (2024). Who Checks the Checkers? Exploring Source Credibility in Twitter's Community Notes. arXiv preprint arXiv:2406.12444.

Effectiveness:

Renault, T., Amariles, D. R., & Troussel, A. (2024). Collaboratively adding context to social media posts reduces the sharing of false news. arXiv preprint arXiv:2404.02803.

Wojcik, S., Hilgard, S., Judd, N., Mocanu, D., Ragain, S., Hunzaker, M. B., ... & Baxter, J. (2022). Birdwatch: Crowd wisdom and bridging algorithms can inform understanding and reduce the spread of misinformation. arXiv preprint arXiv:2210.15723.

Speed:

Bloomberg.com: How Musk’s X Is Failing To Stem the Surge of Misinformation About Israel and Gaza (2023-11-21)

Faked Up: X's Community Notes on Election Day

Algorithm

Jonathan Warden's posts about this

https://jonathanwarden.com/understanding-community-notes

https://jonathanwarden.com/multidimensional-community-notes/

And code for his implementation of the algorithm

https://github.com/social-protocols/bridge-based-ranking

Notes from Vitalik Buterin

https://vitalik.eth.limo/general/2023/08/16/communitynotes.html

And finally…

Dragon on a Rock with Waters Beneath. Japan, late 19th-early 20th century.

Source. MFA Boston via @Sardonicus

END

Comments? Feedback? Algorithmic re-weighting which bridges across the divides within the soul? I am tom@idiolect.org.uk and on Mastodon at @tomstafford@mastodon.online

[1] This is the first Community Note I could find, after searching for Trump, Biden and then Wildfires, and scrolling “Top”. It was much easier to find posts with “Grok” notes - AI generated context. But that’s another story.
