A new study puts another nail in the coffin of "fake news"

Reasonable People #47: Mainstream stories have the lion's share of the impact on people's attitudes to the covid vaccine, shows analysis of massive dataset from Facebook

New work by Jenny Allen uses people’s intention to take the covid vaccine to test the impact of information and misinformation. Specifically, the study sets out to test the impact of the news flagged by professional fact-checkers as false or out-of-context.

Since 2016 there has been an explosive growth in misinformation research, and a large part of this focussed on “fake news”. To the extent that the term means anything stable, it means information which is demonstrably false, from sources with low standards and/or high partisan bias. Prior research has suggested that fake news has a limited reach - most news is consumed from trustworthy sources, and most fake news, when seen, isn’t shared (a tiny minority of online users share the majority of fake news). Despite these findings, the jury is still out. Some, like Sander van der Linden, make the plausible claim that even marginal effects on a minority of the population can be dangerous - especially when the issues are things like knife-edge elections or life-or-death decisions during a pandemic.

Allen’s study uses the Social Science One dataset, which captures link sharing data from Facebook, the Ground Zero of the misinformation panic. The authors argue that despite all the research around misinformation, nobody has provided a good handle on the real-world causal impact of misinformation. Lots has been measured about the information, but people haven’t managed to assess the size of the effects on the population at large. They set out to do this, reasoning that the impact of any information relies on two factors: (1) it has to be seen, and (2) it has to change behaviour.

Their first move, across two studies, was to test the effect of various headlines on vaccine intentions. Contrary to the idea that people reject new information, they found an influence of fake news: “We find that exposure to a single piece of vaccine misinformation decreased vaccination intentions by 1.5 percentage points on average”. This effect was the same for those who were initially positive or initially skeptical about the vaccine (side note: we found the mirror effect for vaccine-promoting information: just telling people about the safety and benefits of vaccines makes their attitudes more positive). Negative impact on vaccine intentions was predicted by whether a headline suggested the vaccine could be harmful, independently of whether the headline was true or false (and independently of whether the headline was from a mainstream news source or not).

Next, Allen and team used the Social Science One data, finding 13,206 URLs which were about the COVID-19 vaccine, were published during the initial rollout of the vaccine in the US (January-March 2021), and were shared publicly more than 100 times on Facebook.

Looking at how many times these headlines were seen, only a tiny proportion of views were of headlines flagged as false or misleading by fact-checkers (8.7 million, out of 2,700 million total views - 0.3%). Similarly, content from low-credibility sources accounted for only a small proportion of all views: 5.1%.

These two steps provide the two kinds of information needed to estimate causal effects: what is the effect when you see a headline AND what headlines were seen, and how often.

The results are clear: although news marked as fake is more likely to be negative about vaccines, and so degrade vaccine intentions when it is seen, the likely effects of fake news are completely swamped by the effects of mainstream news. Headlines which are vaccine skeptical - but not necessarily outright false - have a much larger aggregate effect on degrading the population's intention to take the vaccine. As an example, Allen identified the single most viewed URL across their sample of 13,206. This was from the mainstream Chicago Tribune and was viewed by fully 20% of Facebook’s US population. The piece was titled “A Healthy Doctor Died Two Weeks After Getting a COVID vaccine; CDC is investigating why”, a completely factual report which nonetheless evokes the suspicion that the vaccine is harmful. URLs related to this story were viewed over 65 million times on Facebook, more than 7 times the number of views on all misinformation combined.

As the paper reports:

"Although misinformation was significantly more harmful when viewed, its exposure on Facebook was limited. In contrast, mainstream stories highlighting rare vaccine deaths both increased vaccine hesitancy and were among Facebook’s most-viewed stories."

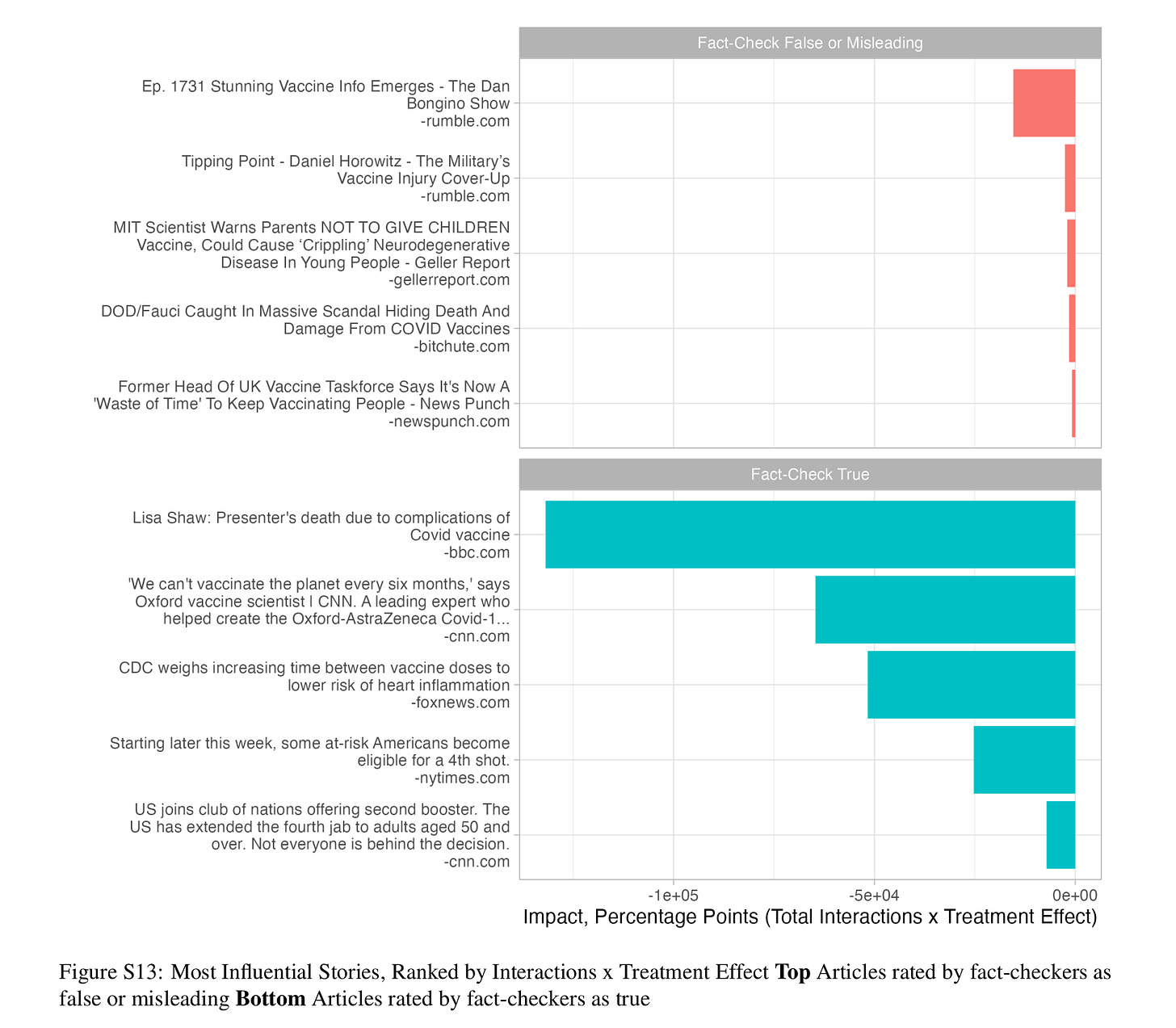

For me, a key plot in this paper is in the supplemental material. This combines the estimate of the effect on vaccine intentions with the number of times a story was viewed to come up with an estimated “total impact”. In red (top part) is the impact of the most influential stories fact checked as false, the fake news. In blue (bottom part) is the impact of the most influential stories fact checked as true.
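The logic of "total impact" (exposure multiplied by persuasive effect) can be sketched with back-of-envelope arithmetic. This is only an illustration using the figures quoted in this post, not the paper's actual estimation procedure: the function name `total_impact` is hypothetical, and applying the 1.5 percentage point average to every flagged view is a simplifying assumption. Rather than guess an effect size for the Chicago Tribune story, the sketch asks how small its per-view effect could be and still match all flagged misinformation combined.

```python
# Figures quoted in this post (views in millions, effects in percentage points).
FLAGGED_VIEWS_M = 8.7      # views of headlines flagged by fact-checkers
TOTAL_VIEWS_M = 2700.0     # views of all vaccine-related URLs in the sample
TRIBUNE_VIEWS_M = 65.0     # views of URLs related to the Chicago Tribune story
MISINFO_EFFECT_PP = 1.5    # average drop in intention per misinformation exposure


def total_impact(views_m: float, effect_pp: float) -> float:
    """Hypothetical helper: exposure times persuasion (million views x pp per view)."""
    return views_m * effect_pp


# Share of all views that went to flagged content.
flagged_share = FLAGGED_VIEWS_M / TOTAL_VIEWS_M

# How many times more views the Tribune story got than all flagged content.
views_ratio = TRIBUNE_VIEWS_M / FLAGGED_VIEWS_M

# Assumption: every flagged view carries the 1.5 pp average effect.
misinfo_impact = total_impact(FLAGGED_VIEWS_M, MISINFO_EFFECT_PP)

# Per-view effect at which the Tribune story alone equals all flagged content.
break_even_pp = misinfo_impact / TRIBUNE_VIEWS_M

print(f"Flagged share of views: {flagged_share:.1%}")
print(f"Tribune story views vs all flagged views: {views_ratio:.1f}x")
print(f"Break-even per-view effect for the Tribune story: {break_even_pp:.2f} pp")
```

Under these assumptions, a single widely viewed mainstream story only needs a per-view effect of roughly 0.2 percentage points to equal the combined impact of all flagged misinformation, which is why reach matters so much in this kind of calculation.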

The plot makes clear that the biggest impact comes from mainstream sources, sources like the BBC, CNN and NY Times. This puts into perspective the panic around fake news. In terms of influence, mainstream journalism holds the responsibility to not just report the truth, but to be very careful about how it reports the truth. If the population is misinformed, it is on them, not fake news.

*

Two caveats. First, the research team is industry funded:

Meta and Google have an interest in downplaying the importance of fake news, so make of that what you will.

Second, although the paper is framed around impact, they only measure attitudes, not behaviour. The gold standard would be to see an effect on whether people actually showed up to get vaccinated, but for practical reasons this is very hard for any research team to measure, especially at scale, and especially linking to social media activity (which is not identified at the individual level by the Social Science One data set anyway).

*

The paper is under review at Science, so read it now in glorious 63-page PDF while you can.

Allen, J. N. L., Watts, D. J., & Rand, D. G. (2023, September 9). Quantifying the Impact of Misinformation and Vaccine-Skeptical Content on Facebook. https://doi.org/10.31234/osf.io/nwsqa

Catch-up service, misinformation theme:

PAPER: Universal principles in the repair of communication problems.

"no other animal communication system has a comparable clarification architecture" is how Olga Khazan in The Atlantic reports this study (Dingemanse et al 2015)

The study claims there are three fundamental forms of clarification (‘other-initiated repair’), and these are:

Open request: indicating you need clarification, something like "Huh?"

Restricted request: indicating what you need clarification about, something like "Who?"

Restricted offer: suggesting a clarification and asking for endorsement, something like "Jody did this?"

ABSTRACT: There would be little adaptive value in a complex communication system like human language if there were no ways to detect and correct problems. A systematic comparison of conversation in a broad sample of the world’s languages reveals a universal system for the real-time resolution of frequent breakdowns in communication. In a sample of 12 languages of 8 language families of varied typological profiles we find a system of ‘other-initiated repair’, where the recipient of an unclear message can signal trouble and the sender can repair the original message. We find that this system is frequently used (on average about once per 1.4 minutes in any language), and that it has detailed common properties, contrary to assumptions of radical cultural variation. Unrelated languages share the same three functionally distinct types of repair initiator for signalling problems and use them in the same kinds of contexts. People prefer to choose the type that is the most specific possible, a principle that minimizes cost both for the sender being asked to fix the problem and for the dyad as a social unit. Disruption to the conversation is kept to a minimum, with the two-utterance repair sequence being on average no longer than the single utterance which is being fixed. The findings, controlled for historical relationships, situation types and other dependencies, reveal the fundamentally cooperative nature of human communication and offer support for the pragmatic universals hypothesis: while languages may vary in the organization of grammar and meaning, key systems of language use may be largely similar across cultural groups. They also provide a fresh perspective on controversies about the core properties of language, by revealing a common infrastructure for social interaction which may be the universal bedrock upon which linguistic diversity rests.

Dingemanse, M., Roberts, S. G., Baranova, J., Blythe, J., Drew, P., Floyd, S., ... & Enfield, N. J. (2015). Universal principles in the repair of communication problems. PloS one, 10(9), e0136100.

PAPER: "BCause: Reducing group bias and promoting cohesive discussion in online deliberation processes through a simple and engaging online deliberation tool"

I really enjoyed the design thinking that went into this platform/paper. Often the platforms we use are carefully designed, but for engagement (or other advertising-serving metrics), so it was a pleasure to read about what a platform could do to support deliberation. Specifically, they chose to:

not collapse feedback between users to a single dimension (e.g. number of “likes”)

support direct replies, retaining context (and maybe keeping discussions on topic)

Maybe it is a stretch, but I detect a sympathy here with Kevin Munger’s recent post about twitter and what it does to discourse

"The increased ease of “political” discussions on Twitter reduces “the political” into just one of many fandoms for the identity-conflict machine to weaponize. It is in this sense impossible to send a political tweet. "

If we really understand why some platforms make good discussion harder, we should be able to build platforms which make it easier. That’s why I find BCause interesting.

You can look at the platform here: bcause.app

Facilitating healthy online deliberation in terms of sensemaking and collaboration of discussion participants proves extremely challenging due to a number of known negative effects of online communication on social media platforms. We start from concerns and aspirations about the use of existing online discussion systems as distilled in previous literature, we then combine them with lessons learned on design and engineering practices from our research team, to inform the design of an easy-to-use tool (BCause.app) that enables higher quality discussions than traditional social media. We describe the design of this tool, highlighting the main interaction features that distinguish it from common social media, namely: i. the low-cost argumentation structuring of the conversations with direct replies; ii. and the distinctive use of reflective feedback rather than appreciative-only feedback. We then present the results of a controlled A/B experiment in which we show that the presence of argumentative and cognitive reflective discussion elements produces better social interaction with less polarization and promotes a more cohesive discussion than common social media-like interactions.

Lucas Anastasiou and Anna De Liddo. 2023. BCause: Reducing group bias and promoting cohesive discussion in online deliberation processes through a simple and engaging online deliberation tool. In Proceedings of the First Workshop on Social Influence in Conversations (SICon 2023), pages 39–49, Toronto, Canada. Association for Computational Linguistics.

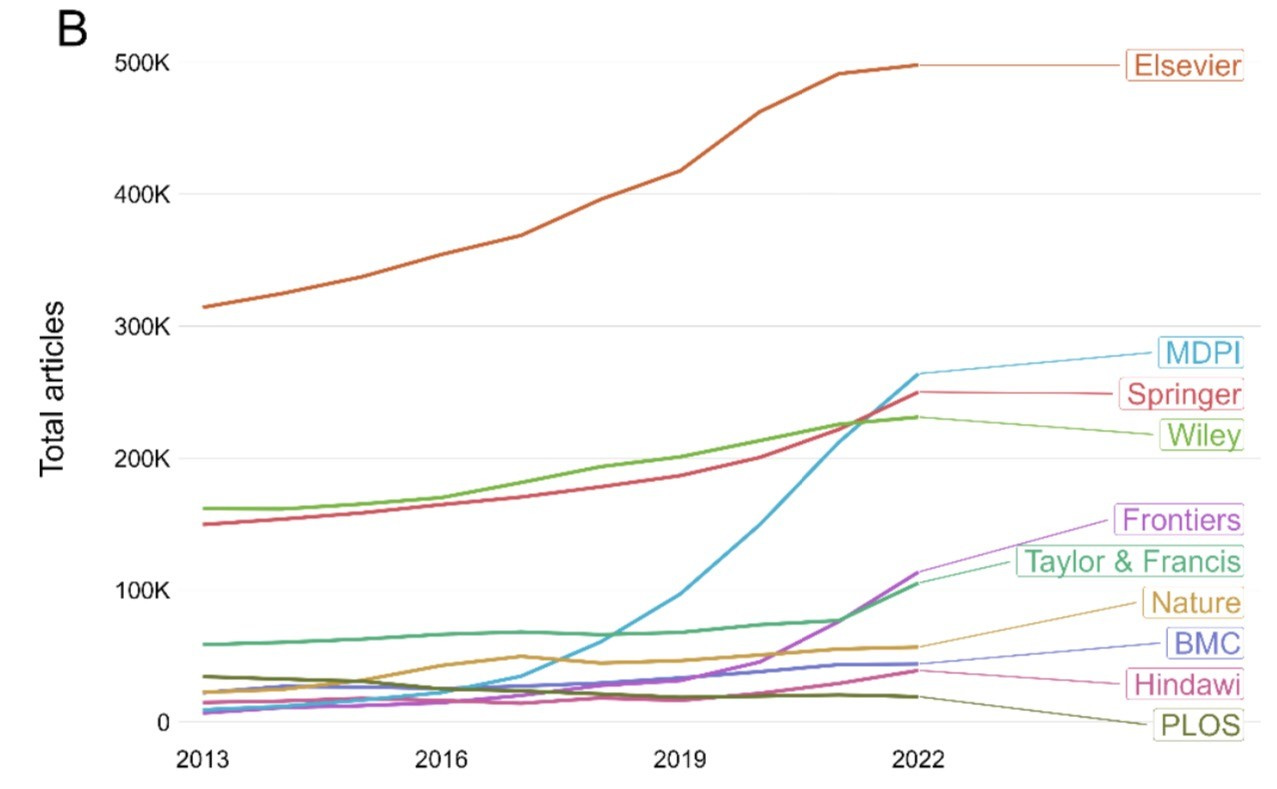

PREPRINT: ‘The strain on scientific publishing’

2016: 1.9 million articles

2022: 2.8 million articles

A threat to our ability for large-scale collective intelligence, or a boon?

Abstract

Scientists are increasingly overwhelmed by the volume of articles being published. Total articles indexed in Scopus and Web of Science have grown exponentially in recent years; in 2022 the article total was 47% higher than in 2016, which has outpaced the limited growth, if any, in the number of practising scientists. Thus, publication workload per scientist (writing, reviewing, editing) has increased dramatically. We define this problem as the strain on scientific publishing. To analyse this strain, we present five data-driven metrics showing publisher growth, processing times, and citation behaviours. We draw these data from web scrapes, requests for data from publishers, and material that is freely available through publisher websites. Our findings are based on millions of papers produced by leading academic publishers. We find specific groups have disproportionately grown in their articles published per year, contributing to this strain. Some publishers enabled this growth by adopting a strategy of hosting special issues, which publish articles with reduced turnaround times. Given pressures on researchers to publish or perish to be competitive for funding applications, this strain was likely amplified by these offers to publish more articles. We also observed widespread year-over-year inflation of journal impact factors coinciding with this strain, which risks confusing quality signals. Such exponential growth cannot be sustained. The metrics we define here should enable this evolving conversation to reach actionable solutions to address the strain on scientific publishing.

Hanson, M. A., Barreiro, P. G., Crosetto, P., & Brockington, D. (2023). The Strain on Scientific Publishing. https://arxiv.org/abs/2309.15884

Not reasonable: MyHouse.WAD

I am not normally the kind of person who spends nearly two hours watching a review of a user-built level of the classic first person shooter DOOM, but this hits the spot between 90s computing nostalgia and knowing references to postmodern literary fiction, with a great pacing of suspense and revelation.

And finally…

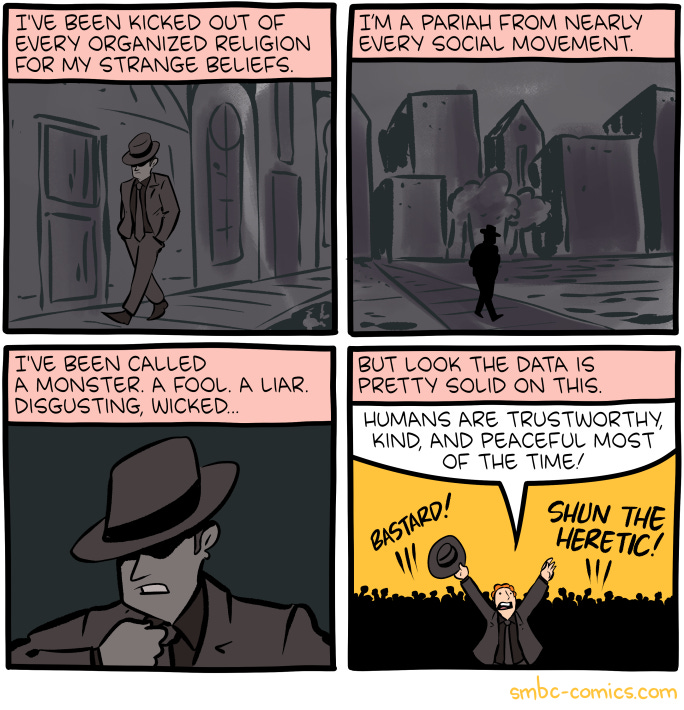

“Heretic” by SMBC

END

Comments? Feedback? Just asking questions? I am tom@idiolect.org.uk and on Mastodon at @tomstafford@mastodon.online

Something more concerning, to me at least, than individual fake news stories like the ones studied, is the rise of media channels which incorporate these into their ongoing narrative - I'm thinking in particular of the Joe Rogan and Russell Brand podcasts, though GB News may well fit into a similar category (I don't know, I've avoided it as much as possible). I see very little sharing of fake news stories on social media, but I do have conversations with loved and respected friends who appear to me to have been somewhat "radicalised" by their podcast choices.