Faking survey responses with LLMs

Survey research is a key mechanism by which society knows itself. We should all be worried if it can't be trusted.

What if the mirror held up to society is being manipulated? An explosive paper published yesterday shows how successful Large Language Models can be at fraudulently completing online surveys. The paper is framed in terms of the consequences for research and researchers, but I make the case that it is far more serious than that.

404 Media reports: A Researcher Made an AI That Completely Breaks the Online Surveys Scientists Rely On:

Online survey research, a fundamental method for data collection in many scientific studies, is facing an existential threat because of large language models, according to new research … The author … Sean Westwood, created an AI tool he calls “an autonomous synthetic respondent,” which can answer survey questions and “demonstrated a near-flawless ability to bypass the full range” of “state-of-the-art” methods for detecting bots.

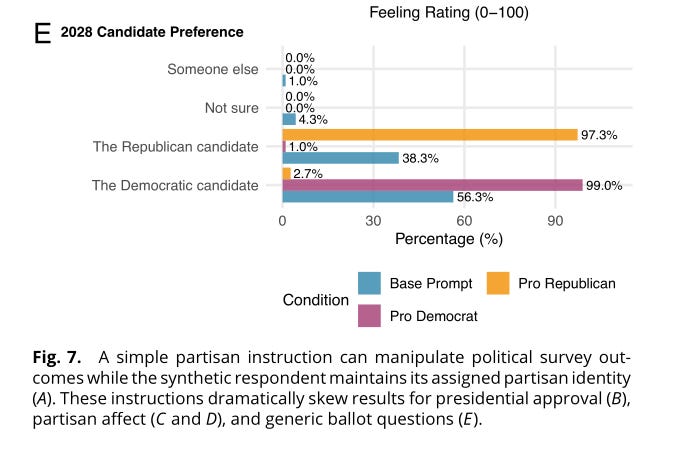

It’s actually possibly worse than this opening paragraph suggests. The checks for AI responding are very good (and completely useless at detecting Westwood’s bot). He shows that the same approach works with a variety of base LLMs. And he shows that as well as convincingly impersonating real survey respondents, the bot can often correctly infer a study’s hypotheses, as well as be instructed to produce responses consistent with a particular overall outcome. This means an army of bots could be engineered to make survey results look, say, like the public favoured military action against a specific nation (or not), or favoured a particular candidate for the next presidency (or not).

This last case is illustrated in this figure from the paper:

Our skepticism of online research should already be high. This tips the scales - default distrust is now a reasonable judgement.
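To get a feel for how few bots such manipulation might need, here is a back-of-envelope sketch in Python. It is my own illustration, not a calculation from Westwood's paper: the function names and all of the numbers are hypothetical, and it assumes the simplest possible case, where every response counts equally and every bot gives the same answer.

```python
def observed_share(true_share, bot_fraction, bot_share=1.0):
    """Share of all responses favouring a position when some responses are bots.

    true_share:   share of genuine respondents favouring the position (hypothetical)
    bot_fraction: fraction of all responses that come from bots (hypothetical)
    bot_share:    share of bot responses favouring the position (1.0 = all of them)
    """
    if not 0.0 <= bot_fraction <= 1.0:
        raise ValueError("bot_fraction must be between 0 and 1")
    # Weighted average of genuine and bot responses.
    return (1.0 - bot_fraction) * true_share + bot_fraction * bot_share


def bot_fraction_needed(true_share, target_share, bot_share=1.0):
    """Fraction of responses that must be bots to move the result to target_share.

    Solves target_share = (1 - f) * true_share + f * bot_share for f.
    """
    if bot_share == true_share:
        raise ValueError("bots that answer like real respondents cannot shift the result")
    return (target_share - true_share) / (bot_share - true_share)


# Hypothetical example: 45% of real respondents favour a policy,
# and one response in ten is a bot instructed to favour it.
shifted = observed_share(0.45, 0.10)

# Hypothetical example: how many bots would push the same survey to 51%?
needed = bot_fraction_needed(0.45, 0.51)

print(f"Observed support with 10% bots: {shifted:.1%}")
print(f"Bot fraction needed to reach 51%: {needed:.1%}")
```

Under these made-up assumptions, a minority position becomes an apparent majority once roughly one response in ten is a bot. Real surveys use weighting and quality screening, so treat this as an intuition pump rather than an estimate.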

Surveys and opinion polls are key tools in how our collective views are represented and reflected back to us. The media reports the polls - This is what people think - and as intensely social creatures it is natural for us to adjust our own beliefs and attitudes in response. There are multiple reasons for this. Not only do other people sometimes have information you don’t (so it is reasonable to weigh their opinion against your own, even if you strongly believe you are right), but there are also advantages to coordinating your beliefs with other people’s. An example is beliefs about which side of the road to drive on: there isn’t some kind of eternal truth about whether driving on the right or the left is correct, but it is essential you believe the same as the other people you share the roads with. Many examples in the realm of manners and social acceptability, rules about what you can say or do, and how you should say or do it, represent similar coordination problems.

If the machinery of societal self-awareness is unreliable, then that represents a powerful lever. We already know that people are drawn to believing other people are less rational and more easily influenced than themselves. If bad actors can manipulate surveys so that people seem worried about an issue (like migration) or on the verge of taking some action (like wanting to indict, or not indict, the president) then this may move us all to protest (or not protest) or to support (or oppose) decisive action by the government.

What we believe other people believe matters. This is the topic of Steven Pinker’s new book on Common Knowledge (“When everyone knows that everyone knows”). It is the reason adverts during the Super Bowl cost so much and why newspapers love celebrity stories.

Surveys are a key part of the machinery for generating common knowledge. So is social media, and it is arguable that part of the contemporary derangement of public life is due to the fact that social media presents such a distorted view of what is important. A prime example is X/Twitter, which had strong buy-in from elites - at one point it was unthinkable that a politician or journalist would not be on Twitter - yet what happens there is extremely unrepresentative. Only a tiny percentage of the actual population are on X (it is one of the smaller large social media platforms), and only a tiny fraction of those users actually post any original content, and that content is sorted and promoted by an algorithm which prioritises controversial and provocative posts. No wonder some, like Will Jennings, are making the case that it is irresponsible for those in public life to be on the platform, arguing It’s time the British government got off X.

With social media already seriously unrepresentative, we don’t want to lose social research as a way to hold up a true mirror to the views of society. This new study makes clear just how much of a threat LLMs represent to the accuracy of survey research. The issue is of concern to all of us, not just survey researchers.

404 Media: A Researcher Made an AI That Completely Breaks the Online Surveys Scientists Rely On

Westwood, Sean J. (2025). The potential existential threat of large language models to online survey research. Proceedings of the National Academy of Sciences. https://doi.org/10.1073/pnas.2518075122


Keep reading for references and further reading on social media and far right rhetoric

CATCH-UP

Recently from me:

When less (communication) is more (collective intelligence): Team work, complex problems, and preserving diversity in the ecosystem of ideas

Listening to Tommy Robinson: What did I learn by giving the right-wing activist my ears for 90 minutes?

Helping people spot misinformation: And the greatest gift psychology gave the world

The Ideological Turing Test: Do you truly understand those you disagree with?

Community Notes require a Community: How we used a novel analysis to understand what causes people to quit the widely adopted content-moderation system

PODCAST: What if we had fixed social media?

An alternate history which asks us to imagine how we might have changed the design patterns in the early days of social media so that it supported society and human flourishing. Optimism is a bit cringe, which itself tells you something, but it is a great exercise and I found the basic idea of “it wasn’t that hard” to fix things inspiring.

Link: What if we had fixed social media?

PODCAST: Rivers of Blood – How Enoch Powell poisoned Britain

A good complement to my recent post on Listening to Tommy Robinson, which highlights how much of Robinson’s shtick is tried-and-true tropes from the far right, back to Enoch Powell and his famous 1968 speech. Here’s a partial list of those tropes from the transcript:

What comes out of the rivers of blood speech?

Play along at home next time you hear an interview with Robert Jenrick or people like that.

First, the speaker claims to represent ordinary Brits who are ignored by the liberal elites and falsely accused of racism for expressing their legitimate concerns, simply saying what everyone’s thinking.

Two, the use of exaggerated statistics. Not made up, but vastly distorted.

Three, the use of alarming anecdotes about immigrants mistreating white people as if that is the normal way of things.

Four, the claim that immigrants are depriving white Brits of access to schools, hospitals, and social services, often made by people who support massive cuts to public spending.

…

[Five] The claim that immigrants, in fact, have more advantages due to inverse racism, making whites a persecuted minority, that’s a quote, and strangers in their own country.

They get up to eight. It’s an instructive list, but I have a hesitation. Dissecting the tropes used by the far right will do precisely nothing to persuade anyone who is open to persuasion by the likes of Robinson. I can’t see someone who feels ignored by liberal elites reacting to being told that Robinson plays on this trope in any other way than to say “He says it because it’s true! He says we have legitimate concerns and are ignored by liberal elites because we are!”.

My conclusion is still that alternative stories are needed, but that is easier said than done.

Origin Story: Rivers of Blood – How Enoch Powell poisoned Britain

…And finally

Stephen Collins: “Every ad now”

Original: @stephencollins.bsky.social

More at: stephencollinsillustration.com

END

Comments? Feedback? And I still need the anti-venom for far-right rhetoric, so if you have that I am tom@idiolect.org.uk and on Mastodon at @tomstafford@mastodon.online

![[Scene is a kitchen - a middle aged woman called JANET is boiling peas at the stove.]

JANET: Ugh...

LIZ: What's up?

JANET: I am so bored of cooking peas!

LIZ: Have you tried... AI peas?

JANET: AI peas?

LIZ: They're peas with AI! [Liz holds up to us a packet of peas labelled: Pea-i AI - Peas with AI].

LIZ: AI-powered peas harness the potential of your peas

JANET: What

LIZ [Now a voiceover as we cut to a whizzy technology diagram of peas all connected by meaningless dotted lines] Why not take your peas to the next level with AI Peas' new AI tools to power your peas? [Show a techno diagram of a pea with a label reading 'AI' pointing to a random zone in it]

LIZ: Each pea has AI in a way we haven't quite worked out yet but it's fine [Show Janet and Liz now in a Matrix-style world of peas]

LIZ: With AI peas you can supercharge productivity and make AI work for your peas!

JANET: What

LIZ: Shut up

LIZ: Our game-changing Pea-AI gives you the freedom to unlock the potential of the power of the future of your peas workflow From opening the bag of peas to boiling the peas to eating the peas To spending millions on adding AI to the peas and then having to work out what that even means.

JANET: Is it really necessary to-

LIZ [Grabbing Janet by the collar]: THE PEAS HAVE GOT AI, JANET

[Cut to an advert ending screen, with the bag of peas and the slogan: AI PEAS: Just 'Peas' for god's sake buy the AI peas.] [Ends]](https://substackcdn.com/image/fetch/$s_!80s_!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fe08dcbd5-f8c6-4e78-9321-535903b80d4d_1080x1350.jpeg)